Abstract

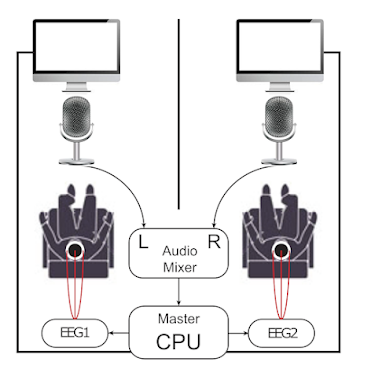

During a conversation, the neural processes supporting speech production and perception overlap in time and are continuously modulated in real time by context, expectations, and the dynamics of the interaction. The growing interest in the neural dynamics underlying interactive tasks, particularly in the language domain, has so far mainly addressed the temporal aspects of turn‐taking in dialogs. Beyond temporal coordination, an under‐investigated phenomenon is the implicit convergence of speakers toward a shared phonetic space. Here, we used dual electroencephalography (dual‐EEG) to record brain signals from subjects engaged in a relatively constrained interactive task in which they took turns chaining words according to a phonetic rhyming rule. We quantified participants’ initial phonetic fingerprints and tracked their phonetic convergence during the interaction via a robust, automatic speaker verification technique. Results show that phonetic convergence is associated with left frontal alpha/low‐beta desynchronization during speech preparation and with high‐beta suppression before and during listening to speech in right centro‐parietal and left frontal sectors, respectively. This work provides evidence that mutual adaptation of speech phonetic targets correlates with specific alpha and beta oscillatory dynamics. These dynamics may index the coordination of both the “when” and the “how” of speech interaction, reinforcing the suggestion that perception and production processes are highly interdependent and co‐constructed during a conversation.